
Pivale: Using Agentic AI Without Vibe Coding


ComputerMinds.co.uk: Drupal 11 Upgrade Gotchas - Slick Carousel

A bit of background

Even as a seasoned Drupal developer, when upgrading a Drupal 10.x site to Drupal 11.x you can still encounter a number of weird issues with some older legacy code on the site, which had previously (unbeknownst to you) relied on functionality that has now changed in some way, shape or form due to the upgrade to Drupal Core and its dependencies.

I've just gone through a long morning of debug hell with some JavaScript functionality on a client's site that has previously not had any issues throughout the last few Drupal upgrades. For context, this site was originally built as a Drupal 7 site (all those years ago!), has been entirely rebuilt and migrated to Drupal 9, upgraded to Drupal 10 and now is finally being upgraded to Drupal 11(.3).

The site has a fair number of different JavaScript-powered carousels, and when it was originally built, the 'in vogue' solution for responsive carousels was the excellent Slick Carousel (on GitHub). I won't go into too many details about this package here, but it's worked well and hasn't caused any issues over the years with previous Drupal upgrades.

The package is dependent on jQuery, and with the move to jQuery 4.x in Drupal core (an optional dependency!), this is where the problems started. Now, it's unfair to expect a package whose last official release was nearly 9 years ago to magically work with a newer version of jQuery which didn't exist at the time (the latest version in 2017 was 3.2 but the plugin was designed around jQuery 2.x), but with the aim of keeping within budget for this Drupal 11 upgrade, it was decided not to rewrite the entire functionality of the carousels on this site.

This would have involved having to implement an entirely new (ideally non jQuery dependent!) plugin such as Swiper or Glider and re-writing the DOM structure in all the Twig templates that contain the markup for each of the carousels, tweaking the styling for each carousel, and then re-writing the various JavaScript code to work with the new chosen plugin. If the site only had a simple type of carousel in a singular place, then swapping out would have been a suitable option but the carousels on the site in question are quite complex in some instances, so I decided to go with trying to make Slick work.

The initial problem

Even though there hasn't been an official release of the package since October 2017, there has been work in the master branch in the last few years to make the plugin work with more modern jQuery versions. The previous upgrade to use jQuery 3.x in Drupal core (versions 8.5 and above) didn't actually cause any noticeable issues with the Slick plugin (at least in our use case), but the version 4 upgrade as part of Drupal 11 finally caused us issues.

jQuery 4 has finally removed a number of deprecated APIs, one of which is jQuery.type, which Slick used internally in multiple parts of its code. Without the function available anymore, of course, the JavaScript blows up! Luckily, there have been a number of commits to the master branch of Slick in the last few years, including one in 2022 that fixed these deprecated calls, allowing it to work properly with more modern jQuery versions. The commit in question was designed to fix jQuery 3.x issues, but by swapping out the deprecated API calls in time, it has enabled Slick to work (mostly) with 4.x as well.
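For code you control that still leans on jQuery.type, a stand-in can be sketched in plain JavaScript. This is only an illustration of the kind of check the removed API performed, not the change made in the Slick commit; the function name typeOf is hypothetical.

```javascript
// Hypothetical stand-in for the removed jQuery.type(); not part of jQuery or Slick.
function typeOf(value) {
  // null and undefined are reported by name.
  if (value == null) {
    return String(value);
  }
  // Primitives are described accurately by typeof.
  if (typeof value !== 'object' && typeof value !== 'function') {
    return typeof value;
  }
  // For objects, read the internal class tag, e.g. "[object Array]" -> "array".
  const tag = Object.prototype.toString.call(value).slice(8, -1).toLowerCase();
  const known = ['boolean', 'number', 'string', 'function', 'array',
    'date', 'regexp', 'object', 'error', 'symbol'];
  // Anything unrecognised falls back to "object".
  return known.includes(tag) ? tag : 'object';
}

console.log(typeOf([]));         // "array"
console.log(typeOf(null));       // "null"
console.log(typeOf(new Date())); // "date"
console.log(typeOf('slide'));    // "string"
```

In most call sites a direct check such as Array.isArray(value) or typeof value === 'string' is probably the simpler replacement.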

So to get the master version in place instead of the latest official release (which is very old), I made the following change to our package.json (the project in question uses npm) in the list of dependencies, changing:

"slick-carousel": "^1.8.1",

to (the latest commit hash in the master branch):

"slick-carousel": "github:kenwheeler/slick#279674a815df01d100c02a09bdf3272c52c9fd55",

and then re-installed the project's JS dependencies to bring the new version in.

(For reference, we have some code that takes the version installed into the node_modules folder and copies it into an appropriate folder in our site's custom theme directory. We then define this as a custom Drupal library and include it only where needed.)
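A copy step of that kind might look roughly like the Node.js sketch below. It is not the project's actual script: the function name, the directories and the file list are all assumptions made for illustration.

```javascript
const fs = require('fs');
const path = require('path');

// Copies the named files from a package directory into a theme directory,
// creating the destination if needed. Returns the paths that were written.
function copyVendorFiles(sourceDir, destDir, fileNames) {
  fs.mkdirSync(destDir, { recursive: true });
  return fileNames.map((name) => {
    const target = path.join(destDir, name);
    fs.copyFileSync(path.join(sourceDir, name), target);
    return target;
  });
}

// Example call; both directories and the file list are assumptions.
// copyVendorFiles(
//   'node_modules/slick-carousel/slick',
//   'web/themes/custom/mytheme/libraries/slick',
//   ['slick.min.js', 'slick.css']
// );
```

The copied files would then be referenced from the theme's libraries definition, so that Drupal only attaches them on pages that need a carousel.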

With the latest version in place, the JS error with the previously used deprecated APIs was gone, yay! But now we had other issues to worry about.

Further problems

The first thing I noticed, now that the JS error was gone, was that a carousel on the homepage looked to be styled incorrectly compared to the version in production. Closer inspection of the DOM revealed that, for some reason (and without any change to the calls invoking the Slick plugin), the slides were now wrapped in two extra divs, instead of the slides themselves getting the slick-slide class (amongst others).

At this point, I just assumed this was newer behaviour in the updated Slick plugin code, so I set about making a few quick changes to the CSS, which had previously not needed to take these extra wrapping divs into account. Later on, I discovered the real reason for these additional wrapping divs... keep reading to find out what it was.

[Screenshots omitted: the homepage carousel broken, then fixed.]

This solved this immediate problem, and then I went hunting for the other carousels on the site, which is where things got very interesting (and time-consuming!)

The next carousels with an apparent problem were on the site's main product page, where one is used on the left-hand side (displayed vertically) and acts as thumbnail navigation for the 'main' product image gallery displayed next to it. The left-hand one appeared to be functioning mostly correctly in itself (with a small style issue), but clicking on it would not progress the main slideshow at all! With no JS errors in the console and nothing obviously wrong, cue the debugging rabbit hole...

Going deeper

I won't go into too much detail here of all the paths I went down whilst debugging this but needless to say it involved swapping out the usually minified version of the Slick plugin for the non minified version, using the excellent Chrome JS debugger and stepping through exactly what was going on when Slick was trying to set this carousel up and why it was behaving like it was.

After a while, I finally realised the issue was present when Slick had started initialising itself - after invoking the plugin in the site's code - but before finishing setup. During its setup, something was going wrong internally with the reference to the slides, which meant they were not copied into the slick-track div, and the previously mentioned (new) wrapping divs were there in the DOM but with no classes on them at all.

The JS debugging revealed that the number of $slides being returned from the following Slick code was actually zero!

    _.$slides =
        _.$slider
            .children( _.options.slide + ':not(.slick-cloned)')
            .addClass('slick-slide');

This meant the rest of the code that would then copy the slides into the slick-track div (amongst other setup procedures) was failing. But how could this possibly be? Running some debug code before initialising Slick showed that the number of slides in the DOM was correct...

The devil is in the detail here, and it turns out that the children() selector here is no longer matching my slide container children. But if we didn't change anything about the code that invokes the carousel, why exactly is it broken?

The key lies in the (optional) slide parameter for Slick (which controls the element query to find the slide). The working vertical carousel (amongst others that were working) wasn't using the slide parameter (as the DOM structure for that carousel has the children directly below the slide container), but the broken carousel was using it (for a reason I won't go into in detail, but it involves other markup in the DOM at that specific place for another purpose on the site, hence the need to specify the selector).

It turns out that if you omit the slide parameter, internally it'll use > div as the selector for slide, and (obviously) if you specify a selector, it'll use that. The code we had invoking Slick specified a custom selector, and now (as mentioned above) there were two extra wrapping divs in play, so the Slick selector .children( _.options.slide + ':not(.slick-cloned)') was not matching anymore: my slide targets were no longer direct children of the slider but nested two levels deeper, inside the new wrappers. The default selector (> div) would have matched the wrappers themselves, but our explicit custom selector matched nothing.
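The mismatch can be reproduced without Slick or jQuery at all. The sketch below models elements as plain objects (the node helper and the gallery class names are made up for illustration) and shows a direct-children lookup finding three slides before the wrapping and none afterwards.

```javascript
// Minimal stand-in for DOM nodes: a class name plus a list of child nodes.
function node(className, children = []) {
  return { className, children };
}

// Rough equivalent of jQuery's .children(selector) for a single class selector:
// only DIRECT children are considered, never deeper descendants.
function childrenWithClass(parent, className) {
  return parent.children.filter((child) => child.className === className);
}

// Mimics the wrapping done for rows = 1 and slidesPerRow = 1:
// every original child ends up inside two new, class-less wrappers.
function wrapInTwoDivs(slider) {
  slider.children = slider.children.map((slide) => node('', [node('', [slide])]));
  return slider;
}

const slider = node('gallery', [
  node('gallery__item'),
  node('gallery__item'),
  node('gallery__item'),
]);

const before = childrenWithClass(slider, 'gallery__item').length;
wrapInTwoDivs(slider);
const after = childrenWithClass(slider, 'gallery__item').length;

console.log(before, after); // 3 0
```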

The real question?

But why are there now two wrapping <div> elements around each slide where previously there were not?

This is the real question that needs answering, now that we understand why the selector wasn't working during the setup for the slides themselves.

Slick's rows setting defaults to 1 (unless overridden when invoking the plugin). Internal code in Slick's buildRows() function (called during initialisation of the carousel) checks whether the number of rows is > 0, and if so, it wraps the inner slides in these two divs!

    Slick.prototype.buildRows = function() {

        var _ = this, a, b, c, newSlides, numOfSlides, originalSlides, slidesPerSection;

        newSlides = document.createDocumentFragment();
        originalSlides = _.$slider.children();

        // Runs for the default rows value of 1 as well, not only for rows > 1.
        if(_.options.rows > 0) {

            slidesPerSection = _.options.slidesPerRow * _.options.rows;
            numOfSlides = Math.ceil(
                originalSlides.length / slidesPerSection
            );

            for(a = 0; a < numOfSlides; a++){
                // First wrapper: one div per "section" (the new slide).
                var slide = document.createElement('div');
                for(b = 0; b < _.options.rows; b++) {
                    // Second wrapper: one div per row inside the section.
                    var row = document.createElement('div');
                    for(c = 0; c < _.options.slidesPerRow; c++) {
                        var target = (a * slidesPerSection + ((b * _.options.slidesPerRow) + c));
                        if (originalSlides.get(target)) {
                            row.appendChild(originalSlides.get(target));
                        }
                    }
                    slide.appendChild(row);
                }
                newSlides.appendChild(slide);
            }

            _.$slider.empty().append(newSlides);
            // The original slides now sit three levels below the slider.
            _.$slider.children().children().children()
                .css({
                    'width':(100 / _.options.slidesPerRow) + '%',
                    'display': 'inline-block'
                });

        }

    };

A quick check of setting rows to 0 in the carousel settings confirmed this was indeed the overall problem, and immediately my carousels looked and behaved the way they did before the update. The rows setting is designed for putting Slick into a "grid mode", where you specify how many rows you want and, with the slidesPerRow parameter, how many slides you want per row.
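To see what the index arithmetic in buildRows() produces, here is a DOM-free sketch that groups an array the same way (sections, then rows, then slides). The function name groupIntoRows is hypothetical, and nested arrays stand in for the wrapper divs.

```javascript
// Groups slides into sections -> rows -> slides, following the loop above.
// Returns the input untouched when rows is 0, mirroring the `rows > 0` guard.
function groupIntoRows(slides, rows, slidesPerRow) {
  if (!(rows > 0)) {
    return slides;
  }
  const slidesPerSection = slidesPerRow * rows;
  const sectionCount = Math.ceil(slides.length / slidesPerSection);
  const sections = [];
  for (let a = 0; a < sectionCount; a++) {
    const section = [];
    for (let b = 0; b < rows; b++) {
      const row = [];
      for (let c = 0; c < slidesPerRow; c++) {
        const target = a * slidesPerSection + (b * slidesPerRow + c);
        if (target < slides.length) {
          row.push(slides[target]);
        }
      }
      // A row is added even when it ends up empty, as in the original loop.
      section.push(row);
    }
    sections.push(section);
  }
  return sections;
}

console.log(JSON.stringify(groupIntoRows(['a', 'b', 'c'], 1, 1)));
// [[["a"]],[["b"]],[["c"]]]
console.log(JSON.stringify(groupIntoRows(['a', 'b', 'c', 'd', 'e'], 2, 2)));
// [[["a","b"],["c","d"]],[["e"],[]]]
console.log(JSON.stringify(groupIntoRows(['a', 'b', 'c'], 0, 1)));
// ["a","b","c"]
```

The first call is the interesting one: with rows and slidesPerRow both at 1, every slide still ends up two levels deep.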

But why are most of the carousels now getting their slides wrapped in rows due to buildRows(), even if I haven't changed the rows parameter from its previous default of 1?

There is a slight confusion when looking at the documentation for the plugin, as it specifies the default value is 1, but also specifies "Setting this to more than 1 initializes grid mode. Use slidesPerRow to set how many slides should be in each row." But this is clearly not true, as we can see a commit that is present in the previous version we were using (1.8.1) had changed this check from if(_.options.rows > 1) to if(_.options.rows > 0) without having updated any of the documentation to say so.

... or is it? The final "gotcha" was that after comparing the minified JS provided in release 1.8.1 with the non-minified JS... the minified JS of 1.8.1 does indeed check if rows > 1, not rows > 0, but the non-minified code checks if rows > 0 - so they don't match, doh! 🤦

Minified code excerpt:

    l.options.rows > 1) {

Non-minified code excerpt:

    if(_.options.rows > 0) {

The master branch commit I'm running for the jQuery fixes correctly has the minified code matching the un-minified code, both checking rows > 0 - consistency, yay!
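A quick way to compare the two builds is to pull the threshold out of each file's text. The sketch below runs a regular expression over the two excerpts quoted above; the function name is hypothetical, and the pattern assumes the check is written as a plain ">" comparison against a number.

```javascript
// Returns the number that `options.rows` is compared against with ">",
// or null when no such comparison is found in the source text.
function findRowsThreshold(source) {
  const match = /\.options\.rows\s*>\s*(\d+)/.exec(source);
  return match ? Number(match[1]) : null;
}

// The two excerpts quoted above, used as sample input.
const minifiedExcerpt = 'l.options.rows > 1) {';
const unminifiedExcerpt = 'if(_.options.rows > 0) {';

console.log(findRowsThreshold(minifiedExcerpt));   // 1
console.log(findRowsThreshold(unminifiedExcerpt)); // 0
```

Against real files, the same function could be fed the contents of the minified and un-minified builds read from disk, and the two results compared.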

What a facepalm moment, eh?

TLDR - The solution:

Whether you're running the updated Slick plugin code on a Drupal 11.x site that uses jQuery 4.x, or an un-minified 1.8.1 release on an older Drupal site running jQuery 3.x: if you don't want your carousels to get the extra wrapping divs, which can cause serious selector issues (when you don't really need the 'grid mode' at all!), just pass rows: 0 as an option when initialising your Slick carousel along with the other options, and it'll behave as it did before.

e.g. (a super basic carousel options initialisation)

    $('.some-carousel-selector').slick({
      arrows: true,
      dots: true,
      slidesToShow: 3,
      slidesToScroll: 3,
      rows: 0, // <-- This is the key if you don't need the grid mode!
    });

If you've made it this far through the article, well done! Hopefully this article saves you from the same few hours of pain that I experienced!
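On a site with many carousels, the rows: 0 option could also be applied in one place rather than repeated in every call. A minimal sketch, assuming a small helper of your own (withoutGridMode is a hypothetical name, not part of Slick):

```javascript
// Merges rows: 0 into a Slick options object unless the caller set rows explicitly.
// The helper name is hypothetical; it is not part of Slick.
function withoutGridMode(options = {}) {
  return { rows: 0, ...options };
}

const options = withoutGridMode({ arrows: true, dots: true, slidesToShow: 3 });
console.log(options.rows); // 0

// A carousel that really needs grid mode keeps its own value.
console.log(withoutGridMode({ rows: 2, slidesPerRow: 2 }).rows); // 2

// Usage with Slick would then look like:
// $('.some-carousel-selector').slick(withoutGridMode({ arrows: true, dots: true }));
```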

Final thoughts

In hindsight, in the time it took to work out exactly what was going on here and write this article up, I could probably have got most of the carousels working with an entirely different plugin. What should have been a 15-minute job here turned into hours, but sometimes these unexpected things just happen when doing upgrades, especially when some of the code being used is from a different era (2017 wasn't that long ago, was it?). It's sometimes a tricky choice to know when to leave legacy code in place and try to make it work, or when it's time to jump ship to another solution.

If it had turned out that there were a multitude of JavaScript errors with the Slick plugin under jQuery 4.x and no obvious solutions without knowing the inner workings of the plugin, then I probably would have changed tack and started re-implementing the carousels with another solution. But this wasn't the case here, and the issues turned out to be a lot more nuanced.

On the plus side, another project that needs a Drupal 11 upgrade also uses Slick carousel from a long time ago, so it really should be a 15-minute job on that one with the knowledge gained here :)


Très Bien Blog: Dig for gold in Drupal Contrib code


Dries Buytaert: A better way to follow Drupal development

I've been reading Drupal Core commits for more than 15 years. My workflow hasn't changed much over time. I subscribe to the Drupal Core commits RSS feed, and every morning, over coffee, I scan the new entries. For many of them, I click through to the issue on Drupal.org and read the summary and comments.

That workflow served me well for a long time. But when Drupal Starshot expanded my focus beyond Drupal Core to include Drupal CMS, Drupal Canvas, and the Drupal AI initiative, it became much harder to keep track of everything. All of this work happens in the open, but that doesn't make it easy to follow.

So I built a small tool I'm calling Drupal Digests. It watches the Drupal.org issue queues for Drupal Core, Drupal CMS, Drupal Canvas, and the Drupal AI initiative. When something noteworthy gets committed, it feeds the discussion and diff to AI, which writes me a summary: what changed, why it matters, and whether you need to do anything. You can see an example summary to get a feel for the format.

Each issue summary currently lives as its own Markdown file in a GitHub repository. Since I still like my morning coffee and RSS routine, I also generate RSS feeds that you can subscribe to in your favorite reader.

I built this to scratch my own itch, but realized it could help with something bigger. Staying informed is one of the hardest parts of contributing to a large Open Source project. These digests can help new contributors ramp up faster, help experienced module maintainers catch API changes, and make collaboration across the project easier.

I'm still tuning the prompts. Right now it costs me less than $2 a day in tokens, so I'm committed to running it for at least a year to see whether it's genuinely useful. If it proves valuable, I could imagine giving it a proper home, with search, filtering, and custom feeds.

For now, subscribe to a feed and tell me what you think.


The Drop Times: Florida DrupalCamp Begins 20 February in Orlando with Canvas and AI in Focus


Talking Drupal: TD Cafe #015 - Karen & Stephen - Non-Profit Summit at DrupalCon

Join Karen Horrocks and Stephen Musgrave as they introduce the upcoming non-profit summit at DrupalCon 2026 in Chicago. In this comprehensive fireside chat, they explore how AI can be integrated to serve a nonprofit's mission, plus the dos and don'ts of AI implementation. Hear insights from leading nonprofit professionals, learn about the variety of breakout sessions available, and discover the benefits of Kubernetes for maximizing ROI. Whether you're a developer, content editor, or a strategic planner, this session is crucial for understanding the future of nonprofit operations with cutting-edge technology.

For show notes visit: https://www.talkingDrupal.com/cafe015

Topics

- Introduction

- Meet Karen & Stephen

- Karen's Journey to Nonprofit Work

- Deep Dive into Drupal and Nonprofit Websites

- Capella's Approach to Continuous Improvement

- Nonprofit Summit Overview

- Exploring Summit Themes: AI and Resiliency

- Digital Sovereignty and Ethical Considerations

- Additional Breakout Sessions and Topics

- Community Engagement and Registration Details

- Conclusion and Final Thoughts

Stephen Musgrave

Stephen (he/him) is a co-founder, partner and Lead Technologist at Capellic, an agency that builds and maintains websites for non-profits. Stephen is bullish on keeping things simple – not simplistic. His goal is to maximize the return on investment and minimize the overhead in maintaining the stack for the long term.

Stephen has been working with the web for over 30 years. He was initially drawn to the magic of using code to create web art, added in his love for relational databases, and has spent his career building websites with an unwavering commitment to structured content.

When Stephen isn't at his desk, he's often running to and swimming in Barton Springs Pool, getting a bit too wound-up at Austin FC games, and playing Legos with his little one.

Karen Horrocks

Karen (she/her, karen11 on drupal.org and Drupal Slack) is a Web and Database Developer for the Physicians Committee for Responsible Medicine, a nonprofit dedicated to saving and improving human and animal lives through plant-based diets and ethical and effective scientific research.

Karen began her career as a government contractor at NASA Goddard Space Flight Center developing websites to distribute satellite data to the public. She moved to the nonprofit world when the Physicians Committee, an organization that she supports and follows, posted a job opening for a web developer. She has worked at the Physicians Committee for over 10 years creating websites that provide our members with the information and tools to move to a plant-based diet.

Karen is a co-moderator of NTEN's Nonprofit Drupal Community. She spoke on a panel at the 2019 Nonprofit Summit at DrupalCon Seattle and is helping to organize the 2026 Nonprofit Summit at DrupalCon Chicago.

Resources

- Nonprofit Summit Agenda: https://events.drupal.org/chicago2026/session/summit-non-profit-guests-must-pre-register
- Register for the Summit (within the DrupalCon workflow): https://events.drupal.org/chicago2026/registration
- Funding Open Source for Digital Sovereignty: https://dri.es/funding-open-source-for-digital-sovereignty
- NTEN's Drupal Community of Practice Zoom call (1p ET on third Thursday of the month except August and December): https://www.nten.org/drupal/notes
- Nonprofit Drupal Slack Channel: #nonprofits on Drupal Slack

Guests

- Karen Horrocks - karen11 www.pcrm.org
- Stephen Musgrave - capellic capellic.com


SearXNG - Privacy-First Web Search for Drupal AI Assistants

If you’ve been following the rapid rise of AI‑driven chatbots and ‘assistant‑as‑a‑service’ platforms, you know one of the biggest pain points is trustworthy, privacy‑preserving web search. AI assistants need access to current information to be useful, yet traditional search engines track every query, building detailed user profiles.

Enter SearXNG - an open‑source metasearch engine that aggregates results from dozens of public search back‑ends while never storing personal data. The new Drupal module lets any Drupal‑based AI assistant (ChatGPT, LLM‑powered bots, custom agents) invoke SearXNG directly from the Drupal site, bringing privacy‑first searching in‑process with your content.

What is SearXNG?

SearXNG aggregates results from up to 247 search services without tracking or profiling users. Unlike Google, Bing or other mainstream search engines, SearXNG removes private data from search requests and doesn't forward anything from third-party services.

Think of it as a privacy-preserving intermediary: your query goes to SearXNG, which then queries multiple search engines on your behalf and aggregates the results, all while keeping your identity completely anonymous.
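As an illustration of that intermediary role, a client can talk to a SearXNG instance over plain HTTP. The sketch below only builds the request URL. It assumes the instance exposes its search endpoint at /search with q and format parameters and has JSON output enabled in its settings; the base URL is a placeholder, and none of this describes how the Drupal module itself is implemented.

```javascript
// Builds a SearXNG search URL that asks for JSON results.
// `baseUrl` is whatever instance you run; the one used below is a placeholder.
function buildSearchUrl(baseUrl, query, extraParams = {}) {
  const url = new URL('/search', baseUrl);
  url.search = new URLSearchParams({ q: query, format: 'json', ...extraParams }).toString();
  return url.toString();
}

console.log(buildSearchUrl('http://localhost:8080', 'drupal 11 jquery 4'));
// http://localhost:8080/search?q=drupal+11+jquery+4&format=json
```

Note that the leading slash in '/search' replaces any path in baseUrl, so an instance served from a sub-path would need that handled separately.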

The Drupal SearXNG Module

The Drupal SearXNG module brings this privacy-focused search capability directly into the Drupal ecosystem. It connects Drupal with your preferred SearXNG server (local or remote), includes a demonstration block, and provides an additional submodule that integrates SearXNG with Drupal AI by offering an AI Agent Tool.

This integration is particularly powerful when combined with Drupal's growing AI ecosystem, including the AI module framework, AI Agents and AI Assistants API.

Key Benefits

Privacy by design

The most compelling benefit is complete privacy protection. When your Drupal AI assistant uses SearXNG to search the web:

- No user tracking or profiling occurs

- Search queries aren't stored or analysed

- IP addresses remain private

- No targeted advertising based on searches

- Compliance with privacy regulations like GDPR

This makes it ideal for organisations in healthcare, government, education and any sector where data privacy is paramount.

Comprehensive search results

By aggregating results from up to 247 search services, SearXNG provides more diverse and comprehensive search results than relying on a single search engine. Your AI assistant gets a broader perspective, potentially finding information that might be missed by individual search engines.

Self-hosted control

Organisations can run their own SearXNG instance, giving them complete control over:

- Which search engines to query

- Rate limiting and usage patterns

- Data residency requirements

- Custom configurations and preferences

- Complete audit trails

Getting started is remarkably straightforward thanks to SearXNG's official Docker image, which makes launching a local server as simple as running a single command. This means organisations can have their own private search instance running in minutes, without complex server configuration or dependencies.

Seamless AI integration

The module's AI Agent Tool integration means that Drupal AI assistants can seamlessly incorporate web search into their workflows. Whether it's a chatbot helping users navigate your site or an AI assistant helping content creators research topics, web search becomes just another capability in the assistant's toolkit.

Practical Use Cases

Internal knowledge assistants

Imagine a corporate intranet where employees use an AI assistant to find both internal documentation and external resources. The assistant can search your internal Drupal content while using SearXNG to find external information, all while maintaining complete privacy about what employees are researching.

Privacy-conscious educational platforms

Universities and schools increasingly need to protect student privacy. A Drupal-powered learning management system with an AI tutor can use SearXNG to help students research topics without creating profiles of their academic interests and struggles.

Government and public sector portals

Government organisations can leverage AI assistants to help citizens find information and services. Using SearXNG ensures that citizen queries remain private and aren't used for commercial purposes.

The Future of Privacy-First AI

The SearXNG Drupal module represents an important step forward in building AI systems that respect user privacy. As AI assistants become more prevalent in web applications, the ability to access current information without compromising privacy will become increasingly valuable.

Drupal's AI framework supports over 48 AI platforms, providing flexibility in choosing AI providers. By combining this with privacy-respecting search through SearXNG, organisations can build powerful, intelligent applications that align with growing privacy expectations and regulations.

Conclusion

Privacy and powerful AI don't have to be mutually exclusive. The SearXNG Drupal module proves that organisations can build intelligent, helpful AI assistants that respect user privacy. Whether you're building internal tools, public-facing applications, or specialised platforms, this module provides a foundation for privacy-first AI that can search the web without compromising user trust.

As data privacy regulations continue to evolve and users become more aware of digital privacy issues, tools like the SearXNG module will become increasingly essential. By adopting privacy-first approaches now, organisations can build user trust while delivering the intelligent, helpful experiences that modern web applications demand.

Find out more and download on the dedicated SearXNG Drupal project page.


Drupal AI Initiative: SearXNG - Privacy-First Web Search for Drupal AI Assistants



Capellic: Stephen appears on TD Cafe: What to expect at the Nonprofit Summit at DrupalCon Chicago


A Drupal Couple: The Blueprint for Affordable Drupal Projects

For years we have been talking about how Drupal got too expensive for the markets we used to serve. Regional clients, small and medium businesses in Latin America, Africa, Asia, anywhere where $100,000 websites are simply not a reality. We watched them go to WordPress. We watched them go to Wix. Not because Drupal was worse, but because the economics stopped working.

That conversation is changing.

Drupal CMS 2.0 landed in January 2026. And with it came a set of tools that, combined intelligently, make something possible that was not realistic before: an affordable, professional Drupal site delivered for $2,000, with margin, for markets that could not afford us before.

I want to show you the math. Not to sell you a fantasy, but because I did the exercise and the numbers work. And I am being conservative.

What changed

The real budget killer was always theming. Getting a site to look right, behave right, be maintainable, took serious senior hours. That is where budgets went.

Recipes pre-package common configurations so you are not starting from zero. Canvas lets clients and site builders assemble and manage pages visually once a developer sets up the component library.

Dripyard brings professional Drupal themes built specifically for Canvas (although they also work with Layout Builder, Paragraphs, etc.), with excellent quality and accessibility, at around $500. While that seems expensive, the code quality, designs, and accessibility are top notch and will save at least 20 hours (and usually much more), which would otherwise easily eat up a small budget.

Three tools. One problem solved.

We proved the concept about a month ago with laollita.es, built in three days using Umami as a starting point. Umami as a version 0.5 of what a proper template should be. Drupal AI for translations, AI-assisted development for CSS and small components. Without formal templates. With proper ones, it gets faster.

The $2,000 blueprint

Scope first. Most small business sites are simple: services, about us, blog, team, contact. The moment you add custom modules or complex requirements, the budget goes up. This blueprint is for projects that accept that constraint.

Start with Drupal CMS and a Dripyard theme. Recipes handle the configuration. Add AI assistance: a paid plan with a capable model such as Claude runs between $15 and $50 depending on usage. Let it help you move faster, but supervise everything. The moment you stop reviewing AI decisions is the moment quality starts leaking.

For hosting, go with a Drupal CMS-specific provider like Drupito, Drupal Forge, or Flexsite, around $20 to $50 per month. Six months included for your client is $300. Those same $300 could go toward a site template from the marketplace launching at DrupalCon Chicago in March 2026, compressing your development time further.

With a constrained scope, the right tools, and AI under supervision, ten hours of net work is realistic. At LATAM-viable rates, $30 per hour on the high side, that is $300 in labor.

The cost breakdown: $500 theme, $300 hosting or template, $300 labor, $50 AI tools. Total: $1,150. Add a $300 buffer and you are at $1,450. Charge $2,000. Your profit is $550, a 27.5% margin.
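The numbers are easy to check. Here is a minimal sketch in Python using only the figures from the breakdown above; the function and key names are illustrative, not part of any tool.

```python
def project_margin(costs, buffer, price):
    """Return (total_cost, profit, margin) for a fixed-price project."""
    total_cost = sum(costs.values()) + buffer
    profit = price - total_cost
    return total_cost, profit, profit / price


# Figures from the $2,000 blueprint breakdown.
costs = {"theme": 500, "hosting_or_template": 300, "labor": 300, "ai_tools": 50}
total_cost, profit, margin = project_margin(costs, buffer=300, price=2000)

print(f"cost ${total_cost:,}, profit ${profit:,}, margin {margin:.1%}")
# prints: cost $1,450, profit $550, margin 27.5%
```

Swap in your own rates and tool costs to see how far the margin moves before the project stops making sense.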

And I am being conservative. As you build experience with the theme, develop your own component library, and refine your tooling, the numbers improve. The first project teaches you. The third one pays better.

The $1,000 path

Smaller budget, smaller scope. Start with Byte or Haven, two Drupal CMS site templates on Drupal.org, or generate an HTML template with AI for around $50. A site template from the upcoming marketplace will run around $300.

The math: $300 starting point, $150 for three months of hosting, $200 incidentals. Cost: $650. Charge $1,000. Margin: 35%.

A $1,000 project is a few pages, clear scope, no special requirements. Both you and the client have to be honest about that upfront.

The real value for your client

When a client chooses Wix or WordPress to save money, they are choosing a ceiling. The day they need more, they are either rebuilding from scratch or paying for plugins and extras that someone still has to configure, maintain, and update every time the platform breaks something.

A client on Drupal CMS is on a platform that grows with them. The five-page site today can become a complex application tomorrow, on the same platform, without migrating. That is the conversation worth having. Not just what they get today, but what they will never have to undo.

The tools are there

The market in Latin America, Africa, Asia, and similar regions was always there. We just did not have the tools to serve it profitably. Now we do.

Drupal CMS, Canvas, Recipes, Dripyard, Drupal CMS-specific hosting, AI assistance with human oversight. The toolkit exists. Get back on trail.


DDEV Blog: DDEV February 2026: v1.25.0 Ships, 72% Market Share, and New Training Posts

DDEV v1.25.0 is here, and the community response has been strong. This month also brought three new training blog posts and a survey result that speaks for itself.

What's New

- DDEV v1.25.0 Released → Improved Windows installer (no admin required), XHGui as default profiler, updated defaults (PHP 8.4, Node.js 24, MariaDB 11.8), faster snapshots with zstd compression, and experimental rootless container support. Read the release post↗

- New ddev share Provider System → Free Cloudflare Tunnel support, no login or token required. A modular provider system with hooks and CMS-specific configuration. Read more↗

- Mutagen in DDEV: Functionality, Issues, and Debugging → Based on the January training session, this post covers how Mutagen works, common issues, and the new ddev utility mutagen-diagnose command. Read more↗

- Xdebug in DDEV: Understanding and Troubleshooting Step Debugging → How the reverse connection model works, IDE setup for PhpStorm and VS Code, common issues, and the new ddev utility xdebug-diagnose command. Read more↗

CraftQuest Survey: DDEV at 72%

The 2026 CraftQuest Community Survey↗ collected responses from 253 Craft CMS developers and found DDEV at 72% market share for local development environments. The report notes: "This near-standardization simplifies onboarding for newcomers, reduces support burden for plugin developers, and means the ecosystem can optimize tooling around a single local dev workflow."

Conference Time!

I'll be at Florida Drupalcamp this week, and will speak on how to use git worktree to run multiple versions of the same site. I'd love to see you and sit down and hear your experience with DDEV and ways you think it could be better.

Then in March I'll be at DrupalCon Chicago and as usual will do lots of Birds-of-a-Feather sessions about DDEV and related topics. Catch me in the hall, or let's sit down and have a coffee.

Community Highlights

- ddev-mngr → A Go-based command-line tool with an interactive terminal UI for managing multiple DDEV projects at once — start, stop, check status, and open URLs across projects. With this add-on Olivier Dobberkau inspired a new TUI approach for DDEV core as well! View on GitHub↗

- TYPO3 DDEV Agent Skill → Netresearch built an Agent Skill (compatible with Claude Code, Cursor, Windsurf, and GitHub Copilot) that automates DDEV environment setup for TYPO3 extension development, including multi-version testing environments for TYPO3 11.5, 12.4, and 13.4 LTS. View on GitHub↗

- Using Laravel Boost with DDEV → Russell Jones explains how to integrate Laravel Boost (an official MCP server) with DDEV, giving AI coding agents contextual access to routes, database schema, logs, and configuration. Read on Dev.to↗

- Laravel VS Code Extension v1.4.2 → Now includes Docker integration support and a fix for Pint functionality within DDEV environments. Read more↗

Community Tutorials from Around the World

- Getting Started with DDEV for Drupal Development → Ivan Zugec at WebWash published a guide covering installation, daily commands, database import/export, Xdebug setup, and add-ons. Read on WebWash↗

- Environnement de développement WordPress avec DDEV → Stéphane Arrami shares a practical review of adopting DDEV for WordPress development, covering client projects, personal sites, and training (in French). Read more↗

What People Are Saying

"I was today years old when I found out that DDEV exists. Now I am busy migrating all projects to Docker containers." — @themuellerman.bsky.social↗

"ddev is the reason I don't throw my laptop out of the window during local setup wars. one command to run the stack and forget the rest. simple as that." — @OMascatinho on X↗

v1.25.0 Upgrade Notes and Known Issues

Every major release brings some friction, and v1.25.0 is no exception. These will generally be solved in v1.25.1, which will be out soon. Here's what to watch for:

- deb.sury.org certificate expiration on v1.24.x → The GPG key for the PHP package repository expired on February 4, breaking ddev start for users still on v1.24.10 who needed to rebuild containers. We pushed updated images for v1.24.10, so you can either ddev poweroff && ddev utility download-images or just go ahead and upgrade to v1.25.0, which shipped with the updated key. Details↗

- MariaDB 11.8 client and SSL → DDEV v1.25.0 ships with MariaDB 11.8 client (required for Debian Trixie), which defaults to requiring SSL. This can break drush sql-cli and similar tools on MariaDB versions below 10.11. Workaround: add extra: "--skip-ssl" to your drush/drush.yml under command.sql.options, or upgrade your database to MariaDB 10.11+. Details↗

- MySQL collation issues → Importing databases can silently change collations, leading to "Illegal mix of collations" errors when joining imported tables with newly created ones. Separately, overriding MySQL server collation via .ddev/mysql/*.cnf doesn't work as expected. #8130↗ #8129↗

- Inter-container HTTP(S) communication → The ddev-router doesn't always update network aliases when projects start or stop, which can break container-to-container requests for *.ddev.site hostnames. Details↗

- Downgrading to v1.24.10 → If you need to go back to v1.24.10, you'll need to clean up ~/.ddev/traefik/config; leftover v1.25.0 Traefik configuration breaks the older version. Details↗

- Traefik debug logging noise → Enabling Traefik debug logging surfaces warning-level messages as "router configuration problems" during ddev start and ddev list, which looks alarming but is harmless. Details↗

- ddev npm and working_dir → ddev npm doesn't currently respect the working_dir web setting, a difference from v1.24.10. Details↗

As always, please open an issue↗ if you run into trouble — it helps us fix things faster. You're the reason DDEV works so well!

DDEV Training Continues

Join us for upcoming training sessions for contributors and users.

February 26, 2026 at 10:00 US ET / 16:00 CET - Git bisect for fun and profit Add to Google Calendar • Download .ics

March 26, 2026 at 10:00 US ET / 15:00 CET - Using git worktree with DDEV projects and with DDEV itself Add to Google Calendar • Download .ics

April 23, 2026 at 10:00 US ET / 16:00 CEST - Creating, maintaining and testing add-ons (2026-updated version of our popular add-on training). Previous session recording↗ Add to Google Calendar • Download .ics

Zoom Info: Link: Join Zoom Meeting Passcode: 12345

Sponsorship Update

After the community rallied in January, sponsorship has held steady and ticked up slightly. Thank you!

Previous status (January 2026): ~$8,208/month (68% of goal)

February 2026: ~$8,422/month (70% of goal)

If DDEV has helped your team, now is the time to give back. Whether you're an individual developer, an agency, or an organization — your contribution makes a difference. → Become a sponsor↗

Contact us to discuss sponsorship options that work for your organization.

Stay in the Loop—Follow Us and Join the Conversation

Compiled and edited with assistance from Claude Code.


The Drop Times: Drupal Core AGENTS.md Proposal Triggers Broader Debate on AI Guardrails


Tag1 Insights: Building the Document Summarizer Tooltip Module with AI-Assisted Coding

At Tag1, we believe in proving AI within our own work before recommending it to clients. This post is part of our AI Applied content series, where team members share real stories of how they're using Artificial Intelligence and the insights and lessons they learn along the way. Here, team member Minnur Yunusov explores how AI-assisted coding helped him rapidly prototype the Document Summarizer Tooltip module for Drupal, while adding AI-generated document previews, improving accessibility, and refining code through real-time feedback.

From idea to working Drupal prototype with AI-assisted coding

I started with a simple goal: build a working prototype that could summarize linked documents directly in Drupal, without having to spend too much time on it. AI-assisted coding helped me move from idea to an installable module quickly, even though the first versions weren’t perfect. The focus was on getting something functional that I could iterate on, instead of hand-writing every piece from scratch.

The prototype I put together with AI-assisted coding works and can be installed and tested. You can find it on GitHub at https://github.com/minnur/docs_summarizer_tooltip.

Initially, I tried using Cline with Claude Sonnet to generate the module. It produced a full module structure, but the result didn’t actually work in Drupal. JavaScript in particular needed refactoring, so I switched over to Claude Code, which became my main tool for debugging and refining the implementation.

What broke, what worked, and what I fixed with Claude Code

One of the biggest pain points was the tooltip behavior itself. The tooltip wasn’t positioning correctly, which meant the UX felt off and inconsistent. I used Claude Code iteratively to adjust the JavaScript until the tooltip appeared in the right place and behaved in a way that felt natural.

Another issue was that the tooltip wasn’t showing the title as expected. I tracked down the generated function responsible for rendering the header, wired in my own variables, and then asked Claude Code to include that variable in the header output. After that targeted change, the tooltip finally displayed the title properly and felt much closer to what I wanted.

Turning document links into smart, AI-powered tooltips

The core concept of the module is straightforward: detect document links on a page and show an AI-generated summary in a tooltip on hover. It started life as a PDF-only prototype, focused on a single file type so I could validate the idea. Once I had the tooltip behavior working smoothly, with correct positioning, title rendering, and consistent UX, I was ready to expand the scope. I asked Claude Code to refactor the module to support more file types beyond PDFs and rename it to “Document Summarizer Tooltip.”

The refactor mostly worked, but the rename was incomplete. Some files kept the old name and needed manual updates. This was a good reminder that while AI can handle broad changes efficiently, it still needs a human to double-check details across Drupal files and configuration.

Accessibility, ARIA, and making AI summaries usable for everyone

Once the basic behavior was there, I wanted to think about accessibility. A tooltip full of AI-generated content is not very helpful if screen readers or keyboard users can’t access it. I asked the AI to help with adding accessibility considerations as a next step, including ARIA attributes and behavior that would work beyond simple mouse hover.

The initial AI-generated settings form went a bit overboard and included more fields than I actually needed. That said, it did a good job of covering a lot of reasonable options. From there, I was able to prune back the form to something simpler and more focused, which also made the UI easier to understand and configure.

What AI got right (and what still needed review)

One thing that stood out to me was how well the AI handled some of the integration details. It added Drupal AI integration and CSRF token support with almost no issues, which saved a lot of time. It also recognized variables I introduced and reused them correctly across functions, which made iterations smoother.

At the same time, the generated code was not something I could just drop in without reading. A few Drupal API calls looked right on the surface but weren’t actually real. That required a thorough review and manual fixes. I didn’t have time to add unit tests for this prototype, but in the future I’d like to see how well AI can help suggest or scaffold tests alongside code changes.

How clients can use AI for prototyping, accessibility, and tests

There are a few clear ways clients could apply this approach. First, AI-assisted coding is very effective for rapid prototyping, especially when you need to validate a module concept before committing a lot of engineering time. Second, using AI to help with accessibility improvements in templates can speed up the process of making interfaces more inclusive.

Finally, I see a lot of potential in using tools like Claude Code to support test creation and maintenance. While I didn’t get to that stage on this project, generating tests, fixing contributed modules, and experimenting with code improvements all look like strong fits for this kind of workflow. The Document Summarizer Tooltip itself could also be directly useful on content-heavy sites that want instant, inline document previews.

If you’d like to explore the code or try the module yourself, the prototype is available on GitHub at https://github.com/minnur/docs_summarizer_tooltip.

This post is part of Tag1’s AI Applied content series, where we share how we're using AI inside our own work before bringing it to clients. Our goal is to be transparent about what works, what doesn’t, and what we are still figuring out, so that together, we can build a more practical, responsible path for AI adoption.

Bring practical, proven AI adoption strategies to your organization. Let's start a conversation! We'd love to hear from you.


February 2026 Drupal for Nonprofits Chat

Join us THURSDAY, February 19 at 1pm ET / 10am PT, for our regularly scheduled call to chat about all things Drupal and nonprofits. (Convert to your local time zone.)

We don't have anything specific on the agenda this month, so we'll have plenty of time to discuss anything that's on our minds at the intersection of Drupal and nonprofits. Got something specific you want to talk about? Feel free to share ahead of time in our collaborative Google document at https://nten.org/drupal/notes!

All nonprofit Drupal devs and users, regardless of experience level, are always welcome on this call.

This free call is sponsored by NTEN.org and open to everyone.

Information on joining the meeting can be found in our collaborative Google document.



Specbee: 8 Critical considerations for a successful Drupal 7 to 10/11 migration


Metadrop: Managing Drupal Status Report requirements

You know the drill. You visit the Drupal Status Report to check if anything needs attention, and you're greeted by a wall of warnings you've seen dozens of times before.

Some warnings are important. Others? Not so much. Maybe you're tracking an update notification in your GitLab and don't need the constant reminder. Perhaps there's a PHP deprecation notice you're already aware of and planning to address during your next scheduled upgrade. Or you're seeing environment-specific warnings that simply don't apply to your infrastructure setup.

The noisy status report problem

The problem is that all these warnings sit alongside genuine issues that actually need your attention. The noise drowns out the signal. You end up scrolling past the same irrelevant messages every time, increasing the chance you'll miss something that matters.

Over time, you develop warning blindness. Your brain learns to ignore the status report page entirely because the signal-to-noise ratio is too low. Then, when a genuine security update appears or a database schema issue emerges, it gets lost in the familiar sea of orange and red.

This problem multiplies across teams. Each developer independently decides which warnings to ignore. New team members have no way to know which warnings matter and which ones are environmental noise. The status report becomes unreliable, defeating its entire purpose.

…

DDEV Blog: Xdebug in DDEV: Understanding, Debugging, and Troubleshooting Step Debugging

For most people, Xdebug step debugging in DDEV just works: ddev xdebug on, set a breakpoint, start your IDE's debug listener, and go. DDEV handles all the Docker networking automatically. If you're having trouble, run ddev utility xdebug-diagnose or ddev utility xdebug-diagnose --interactive. They check your configuration and connectivity and tell you exactly what to fix.

This post explains how the pieces fit together and what to do if things do go wrong.

The Quick Version

- ddev xdebug on

- Start listening in your IDE (PhpStorm: click the phone icon; VS Code: press F5)

- Set a breakpoint in your entry point (index.php or web/index.php)

- Visit your site

If it doesn't work:

ddev utility xdebug-diagnose

Or for guided, step-by-step troubleshooting:

ddev utility xdebug-diagnose --interactive

The diagnostic checks port 9003 listener status, host.docker.internal resolution, WSL2 configuration, xdebug_ide_location, network connectivity, and whether Xdebug is loaded. It gives actionable fix recommendations.

How Xdebug Works

Xdebug lets you set breakpoints, step through code, and inspect variables — interactive debugging instead of var_dump().

The connection model is a reverse connection: your IDE listens on port 9003 (it's the TCP server), and PHP with Xdebug initiates the connection (it's the TCP client). Your IDE must be listening before PHP tries to connect.

Note: The Xdebug documentation uses the opposite terminology, calling the IDE the "client." We use standard TCP terminology here.
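To make the direction of the connection concrete, here is a minimal sketch in Python of the same pattern: one side listens first (the IDE's role), the other side initiates the connection (the role of PHP with Xdebug). It is an illustration only. It does not speak Xdebug's actual protocol, the message is a placeholder, and it uses an OS-assigned port on localhost rather than 9003 so it can run anywhere.

```python
import socket
import threading


def ide_listener(server_sock, received):
    """Play the IDE's role: accept one connection and read until the peer closes."""
    conn, _addr = server_sock.accept()
    with conn:
        chunks = []
        while True:
            data = conn.recv(1024)
            if not data:
                break
            chunks.append(data)
    received.append(b"".join(chunks))


# The "IDE" binds and listens first. Port 0 asks the OS for any free port;
# a real IDE would listen on 9003.
server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))
server.listen(1)
port = server.getsockname()[1]

received = []
listener = threading.Thread(target=ide_listener, args=(server, received))
listener.start()

# "PHP with Xdebug" is the TCP client: it initiates the connection.
with socket.create_connection(("127.0.0.1", port), timeout=5) as client:
    client.sendall(b"placeholder message from the PHP side")

listener.join(timeout=5)
server.close()

# With the listener gone, the same connection attempt is expected to fail,
# which is what happens when the IDE is not listening.
try:
    socket.create_connection(("127.0.0.1", port), timeout=5).close()
    listener_was_missing = False
except OSError:
    listener_was_missing = True

print(received[0].decode(), "| failed without listener:", listener_was_missing)
```

The second attempt is the situation behind most "Xdebug doesn't connect" reports: nothing is accepting connections on the port when PHP tries to reach it.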

How DDEV Makes It Work

DDEV configures Xdebug to connect to host.docker.internal:9003. This special hostname resolves to the host machine's IP address from inside the container, so PHP can reach your IDE across the Docker boundary.

The tricky part is that host.docker.internal works differently across platforms. DDEV handles this automatically:

- macOS/Windows: Docker Desktop and Colima provide host.docker.internal natively

- Linux: DDEV uses the docker-compose host gateway feature

- WSL2: DDEV determines the correct IP based on your configuration

You can verify the resolution with:

ddev exec getent hosts host.docker.internal

DDEV Xdebug Commands

- ddev xdebug on/off/toggle: Enable, disable, or toggle Xdebug

- ddev xdebug status: Check if Xdebug is enabled

- ddev xdebug info: Show configuration and connection details

IDE Setup

PhpStorm

Zero-configuration debugging works out of the box:

- Run → Start Listening for PHP Debug Connections

- Set a breakpoint

- Visit your site

PhpStorm auto-detects the server and path mappings. If mappings are wrong, check Settings → PHP → Servers and verify /var/www/html maps to your project root.

The PhpStorm DDEV Integration plugin handles this automatically.

VS Code

Install the PHP Debug extension and create .vscode/launch.json:

    {
      "version": "0.2.0",
      "configurations": [
        {
          "name": "Listen for Xdebug",
          "type": "php",
          "request": "launch",
          "port": 9003,
          "hostname": "0.0.0.0",
          "pathMappings": {
            "/var/www/html": "${workspaceFolder}"
          }
        }
      ]
    }

The VS Code DDEV Manager extension can set this up for you.

WSL2 + VS Code with WSL extension: Install the PHP Debug extension in WSL, not Windows.

Common Issues

Most problems fall into a few categories. The ddev utility xdebug-diagnose tool checks for all of these automatically.

Breakpoint in code that doesn't execute: The #1 issue. Start with a breakpoint in your entry point (index.php) to confirm Xdebug works, then move to the code you actually want to debug.

IDE not listening: Make sure you've started the debug listener. PhpStorm: click the phone icon. VS Code: press F5.

Incorrect path mappings: Xdebug reports container paths (/var/www/html), and your IDE needs to map them to your local project. PhpStorm usually auto-detects this; VS Code needs the pathMappings in launch.json.

Firewall blocking the connection: Especially common on WSL2, where Windows Defender Firewall blocks connections from the Docker container. Quick test: temporarily disable your firewall. If debugging works, add a firewall rule for port 9003.
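The path-mapping issue above is the one that is easiest to picture in code. The following is a minimal sketch in Python of what a mapping from container paths to local paths amounts to. It is not how PhpStorm or the VS Code PHP Debug extension implement it; the function name and the local project path are hypothetical.

```python
from pathlib import PurePosixPath


def to_local_path(container_path, path_mappings):
    """Translate a container path to a local path using prefix mappings."""
    path = PurePosixPath(container_path)
    # Try the longest container prefix first so more specific mappings win.
    for prefix in sorted(path_mappings, key=len, reverse=True):
        try:
            relative = path.relative_to(prefix)
        except ValueError:
            continue  # this prefix does not contain the path
        return str(PurePosixPath(path_mappings[prefix]) / relative)
    return None  # no mapping covers this path


# /var/www/html is the container path from the text; the local side is made up.
mappings = {"/var/www/html": "/home/me/projects/mysite"}

print(to_local_path("/var/www/html/web/index.php", mappings))
# prints: /home/me/projects/mysite/web/index.php
```

When a path falls outside every mapping (the None case here), the IDE cannot tie a stopped frame to a local file, which is typically why a breakpoint appears to be ignored.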

WSL2 Notes

WSL2 adds networking complexity. The most common problems:

Windows Defender Firewall blocks connections from WSL2 to Windows. Temporarily disable it to test; if debugging works, add a rule for port 9003.

Mirrored mode requires hostAddressLoopback=true in C:\Users\<username>\.wslconfig:

    [experimental]
    hostAddressLoopback=true

Then wsl --shutdown to apply.

IDE in WSL2 (VS Code + WSL extension): Set

ddev config global --xdebug-ide-location=wsl2

Special Cases

Container-based IDEs (VS Code Remote Containers, JetBrains Gateway):

ddev config global --xdebug-ide-location=container

Command-line debugging: Works the same way — ddev xdebug on, start your IDE listener, then ddev exec php myscript.php. Works for Drush, WP-CLI, Artisan, and any PHP executed in the container.

Debugging Composer: Composer disables Xdebug by default. Override with:

ddev exec COMPOSER_ALLOW_XDEBUG=1 composer install

Custom port: Create .ddev/php/xdebug_client_port.ini with xdebug.client_port=9000 (rarely needed).

Debugging host.docker.internal resolution: Run DDEV_DEBUG=true ddev start to see how DDEV determines the IP.

Advanced Features

xdebugctl: DDEV includes the xdebugctl utility for dynamically querying and modifying Xdebug settings, switching modes (debug, profile, trace), and more. Run ddev exec xdebugctl --help. See the xdebugctl documentation.

Xdebug map feature: Recent Xdebug versions can remap file paths during debugging, useful when container paths don't match local paths in complex ways. This complements IDE path mappings.

Performance: Xdebug adds overhead. Use ddev xdebug off or ddev xdebug toggle when you're not actively debugging.

More Information

Claude Code was used to create an initial draft for this blog, and for subsequent reviews.


DrupalCon News & Updates: Must-See DrupalCon Chicago 2026 Sessions for Marketing and Content Leaders

If you are a marketing or content leader, DrupalCon Chicago 2026 is already calling your name. You are the special audience whose creative spark and unique perspective shine a light on Drupal in ways developers alone never could. You promote Drupal’s capabilities to the world and ensure the platform reaches the users who need it. You translate technical innovation into stories that resonate with everyone.

Drupal is increasingly built with you in mind. Making Drupal more editor‑friendly has been a clear priority in recent years. Thanks to your feedback and insights, great strides have been made in providing tools and workflows that truly support your creative vision.

This year’s DrupalCon sessions are set to spark bold insights, fresh strategies, and lively discussions. Expect those unforgettable “aha!” moments you’ll want to carry back and weave into your own marketing and content playbook. Here is a curated list of standout sessions designed to help marketing and content leaders turn inspiration into action, build meaningful connections, and discover new ways to make the most out of Drupal’s strengths.

Top DrupalCon Chicago 2026 sessions for marketing or content leaders

“Generative engine optimization tactics for discoverability” — by Jeffrey McGuire and Tracy Evans

Search Engine Optimization (SEO) has long been one of the web’s most familiar acronyms when it comes to boosting content visibility. But new times bring new terms, and it’s time to meet “GEO” (Generative Engine Optimization).

Indeed, traditional SEO alone is no longer enough in a world where tools like ChatGPT, Perplexity, and Google’s AI Overviews are everyday sources of advice. Today, SEO and GEO must work hand in hand. DrupalCon Chicago 2026 has an insightful session designed to introduce you to a new way of helping your content reach its audience in the age of AI-driven recommendations.

Join brilliant speakers, Jeffrey McGuire (horncologne) and Tracy Evans (kanadiankicks), to stay ahead of the curve. Jeffrey A. “jam” McGuire has been one of the most influential voices in the Drupal community for over two decades, recognized as a marketing strategy and communications expert. With their combined expertise, this session is tailored for marketing and content leaders who want practical, actionable guidance.

You’ll explore how to make your agency, SaaS product, or company stand out when large language models decide which names to surface. Practical strategies will follow, helping you position your expertise, strengthen credibility signals, and align your content with the data sources LLMs rely on. The session will draw from real-world research, client projects, and observations.

“Context is Everything: Configuring Drupal AI for Consistent, On-Brand Content” — by Kristen Pol and Aidan Foster

It shouldn’t come as a surprise that the next session on this list is also about AI. Of course, you already know that artificial intelligence can churn out content in seconds. But how do you make sure it’s consistent with your brand’s voice, feels authentic to your organization, and resonates with your audience?

That’s where Drupal’s latest innovations, Context Control Center and Drupal Canvas, step in. Expect more exciting details at this session at DrupalCon Chicago 2026, which is a must‑see for marketing and content leaders.

This talk will be led by Kristen Pol (kristen pol) and Aidan Foster (afoster), the maintainers behind Context Control Center and Drupal Canvas. Through live demos, you’ll see landing pages, service pages, and blog posts come to life with clear context rules.

You’ll also leave with a practical starter framework for building your own context files, giving you the confidence to guide AI toward content that supports your marketing goals and strengthens your brand presence.

“From Chaos to Clarity: Building a Sustainable Content Governance Model with Drupal” — by Richard Nosek and C.J. Pagtakhan

Content chaos is something every marketing and content leader has faced: fragmented messaging, inconsistent standards, and editorial bottlenecks that slow campaigns down. At DrupalCon Chicago 2026, you’ll discover an actionable plan to make your content consistent, organized, and aligned with your brand’s goals.

Join this compelling session by Richard Nosek and C.J. Pagtakhan, seasoned experts in digital strategy. They’ll show how structured governance can scale across departments without stifling creativity. Explore workflows that make life easier for authors, editors, and administrators, including approval processes, audits, and lifecycle management. Discover clear frameworks for roles, responsibilities, and standards.

And because theory is best paired with practice, you’ll see real-world examples of how this approach improves quality, strengthens collaboration, and supports long‑term digital strategy on Drupal websites of every size and scope.

“Selling Drupal: How to win projects, and not alienate delivery teams” — by Hannah O'Leary and Hannah McDermott

Within agencies, sales and delivery departments share the same ultimate goal: client success. However, sales teams chase ambitious targets, while delivery teams focus on scope, sustainability, and the realities of open‑source implementation. Too often, this push and pull leads to friction, misaligned expectations, and even dips in client satisfaction.

At DrupalCon Chicago 2026, Hannah O’Leary (hannaholeary) and Hannah McDermott (hannah mcdermott) will share how they turned that challenge into a partnership at Zoocha. Through transparent handovers, joint scoping, and shared KPIs, they built a framework where both sides thrive together.

This session will highlight how open communication improved forecasting, reduced “us vs. them” dynamics, and directly boosted the quality of Drupal delivery. You’ll leave with practical strategies to apply in your own organization, including fostering empathy across teams, aligning metrics, and creating a culture of transparency.

“A Dashboard that Works: Giving Editors What They Want, But Focusing on What They Need” — by Albert Hughes and Dave Hansen-Lange

Imagine logging in and instantly seeing what matters most to your content team: recent edits, accessibility checks, broken links, permissions, and so on. That’s the power of a dashboard built not just to look good, but to truly support editors in their daily work.

Join Albert Hughes (ahughes3) and Dave Hansen-Lange (dalin) at their session as they share the journey of shaping a dashboard for 500 editors across 130 sites. You’ll hear how priorities were set, how editor needs were balanced with technical realities, and how decisions shaped a tool that keeps content teams focused and confident.

You’ll walk away with practical lessons you can apply to your own platform and a fresh perspective on how smart dashboards can empower editors and strengthen content leadership.

“Drupal CMS Spotlights” — by Gábor Hojtsy

As marketing and content leaders, you will appreciate a session on Drupal’s latest innovations that can make a difference in your work. One of the greatest presentations for this purpose at DrupalCon Chicago 2026 is the Drupal CMS Spotlights.

Drupal CMS is a curated version of Drupal packed with pre-configured features, many of which are focused on content experiences. For example, you can instantly spin up a ready-to-go blog, SEO tools, events, and more.

The session brings together key Drupal CMS leaders to share insights on recent developments and plans for the future. You’ll hear about Site Templates, the new Drupal Canvas page builder, AI, user experience, usability, documentation, and more.

Gábor Hojtsy (gábor hojtsy), Drupal core committer and initiative coordinator, is known for his engaging style, so you’ll enjoy the session even if some details get technical.

“Launching the Drupal Site Template Marketplace” — by Tim Hestenes Lehnen

For marketing and content leaders, the launch of the Drupal Site Template Marketplace is big news. Each template combines recipes (pre‑configured feature sets), demo content, and a Canvas‑compatible theme, making it faster than ever to launch a professional, polished website. For anyone focused on storytelling, campaigns, or digital experiences, this is a game‑changer.

The pilot program at DrupalCon Vienna 2025 introduced the first templates, built with the support of Drupal Certified Partners. Now, the Marketplace is expanding, offering a streamlined way to discover, select, and implement templates that align with your goals.

Join Tim Hestenes Lehnen (hestenet), a renowned Drupal core contributor, for a session that dives deeper. He’ll share lessons learned from the pilot, explain how the Marketplace connects to the roadmap for Drupal CMS and Drupal Canvas, and explore what’s next as more templates become available.

Driesnote — by Dries Buytaert

The inspiring keynote by Dries Buytaert, Drupal’s founder, is a session that can’t be missed. Driesnote closes the opening program at Chicago 2026 and sets the tone for the entire conference. It’s your perfect chance to see where Drupal is headed, and how those changes make your work easier, faster, and more creative.

At DrupalCon Vienna 2025, the audience in the main auditorium was the first to hear Dries’ announcements. Among other things, they heard about increased funding for the AI Initiative, doubled contributions to Drupal CMS, and site templates available in the Marketplace.

Marketers and content editors were especially amazed to see what’s becoming possible in their work: content templates in Drupal Canvas, a Context Control Center to help AI capture brand voice, and autonomous Drupal agents keeping content up to date automatically.

This year, the mystery of what’s next is yours to uncover. Follow the crowd to the main auditorium at DrupalCon Chicago and expect that signature “wow” moment that leaves the audience buzzing.

Final thoughts

Step into DrupalCon Chicago 2026 and reignite your marketing and content vision. Connect with peers, recharge your ideas, and see how Drupal continues to evolve. The sessions are designed to spark creativity and provide tools that can be put to work right away. As you head into the event, keep an open mind, lean into the conversations, and enjoy the energy that comes from sharing ideas across our amazing community.

Authored By: Nadiia Nykolaichuk, DrupalCon Chicago 2026 Marketing & Outreach Committee Member

Talking Drupal: Talking Drupal #540 - Acquia Source

Today we are talking about Acquia's fully managed Drupal SaaS, Acquia Source, what you can do with it, and how it could change your organization, with guest Matthew Grasmick. We'll also cover AI Single Page Importer as our module of the week.

For show notes visit: https://www.talkingDrupal.com/540

Topics

- Introduction to Acquia Source

- The Evolution of Acquia Source

- Cost and Market Position of Acquia Source

- Customizing and Growing Your Business

- Challenges of Building a SaaS Platform on Drupal

- Advantages of Acquia Source for Different Markets

- Horizontal Scale and Governance at Scale

- Canvas CLI Tool and Synchronization

- Role of AI in Acquia Source

- Agencies and Enterprise Clients

- AI Experiments and Content Importer

- AI and Orchestration in Drupal

- Future Innovations in Acquia Source

Matthew Grasmick - grasmash

Hosts

Nic Laflin - nLighteneddevelopment.com nicxvan

John Picozzi - epam.com johnpicozzi

Catherine Tsiboukas - mindcraftgroup.com bletch

MOTW Correspondent

Martin Anderson-Clutz - mandclu.com mandclu

- Brief description:

  - Have you ever wanted to use AI to help map various content on an existing site to structured fields on a Drupal site, as part of creating a node? There's a module for that.

- Module name/project name:

  - AI Single Page Importer

- Brief history

  - How old: created in Jan 2026 by Mark Conroy (markconroy), who listeners may know from his work on the LocalGov distribution and install profile

  - Versions available: 1.0.0-alpha3, which works with Drupal core 10 or 11

- Maintainership

  - Actively maintained

  - Documentation - pretty extensive README, which is also currently in use as the project page

  - No issues yet

- Usage stats:

  - 2 sites

- Module features and usage

  - With this module enabled, you'll have a new "AI Content Import" section at the top of the node creation form. In there you can provide the URL of the existing page to use, and then click "Import Content with AI". That will trigger a process where OpenAI will ingest and analyze the existing page. It will extract values to populate your node fields, and then you can review or change those values before saving the node.

  - In the configuration you can specify the AI model to use, a maximum content length, an HTTP request timeout value, and which content types should have the importer available. You can also prevent abuse by specifying blocked domains, a flood limit, and a flood window. You will also need to grant a new permission to use the importer for any user roles that should have access.

  - The module also includes a number of safeguards. For example, it will only accept URLs using HTTP or HTTPS protocols, private IP ranges are blocked, and by default it will only allow 5 requests per user per hour. It will perform HTML purification for long text fields, and strip tags for short text fields. In addition, it removes dangerous attributes like onclick or inline JavaScript, and generates CKEditor-compatible output.

  - It currently supports a list of field types that includes text_long, text_with_summary, string, text, datetime, daterange, timestamp and link fields. It also supports entity reference fields, but only for taxonomy terms.

  - Listeners may also be aware of the Unstructured module which does some similar things, but requires you to use an Unstructured service or run a server using their software. So I would say that AI Single Page Importer is perhaps a little more narrow in scope but works with an OpenAI account instead of requiring the less commonly used Unstructured.
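To make the safeguards described above a little more concrete, here is a minimal Python sketch of three of them: the HTTP/HTTPS scheme allow-list, private IP blocking, and a per-user flood limit of 5 requests per hour. This is not the module's code (the module is a Drupal project, and its internals are not shown in these notes). The names `is_url_allowed` and `FloodLimiter`, and the in-memory storage, are assumptions made purely for illustration.

```python
import ipaddress
import time
from collections import defaultdict, deque
from urllib.parse import urlsplit

ALLOWED_SCHEMES = {"http", "https"}
FLOOD_LIMIT = 5        # default requests per user mentioned in the notes
FLOOD_WINDOW = 3600    # one hour, in seconds


def is_url_allowed(url, blocked_domains=frozenset()):
    """Hypothetical check: scheme allow-list, blocked domains, private IPs."""
    try:
        parts = urlsplit(url)
        host = parts.hostname
    except ValueError:
        return False  # malformed URL
    if parts.scheme.lower() not in ALLOWED_SCHEMES or not host:
        return False
    if host in blocked_domains:
        return False
    try:
        ip = ipaddress.ip_address(host)
    except ValueError:
        # Not an IP literal. A real implementation would also resolve the
        # hostname and apply the same check to every resolved address.
        return True
    return not (ip.is_private or ip.is_loopback or ip.is_link_local)


class FloodLimiter:
    """Hypothetical sliding-window limiter kept in memory for illustration."""

    def __init__(self, limit=FLOOD_LIMIT, window=FLOOD_WINDOW):
        self.limit = limit
        self.window = window
        self._hits = defaultdict(deque)

    def allow(self, user_id, now=None):
        now = time.time() if now is None else now
        hits = self._hits[user_id]
        # Drop requests that have fallen out of the window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.limit:
            return False
        hits.append(now)
        return True
```

In a sketch like this, a request would only proceed when both `is_url_allowed(url)` and `limiter.allow(user_id)` return true. A production version would need persistent flood storage and DNS-aware IP checks, which are left out here.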

Four Weeks of High Velocity Development for Drupal AI

While Artificial Intelligence is evolving rapidly, many applications remain experimental and difficult to implement in professional production environments. The Drupal AI Initiative addresses this directly, driving responsible AI innovation by channelling the community's creative energy into a clear, coordinated product vision for Drupal.

In this article, the third in a series, we highlight the outcomes of the latest development sprints of the Drupal AI Initiative. Part one outlines the 2026 roadmap presented by Dries Buytaert. Part two addresses the organisation and new working model for the delivery of AI functionality.

Authors: Arian, Christoph, Piyuesh, Rakhi (alphabetical)

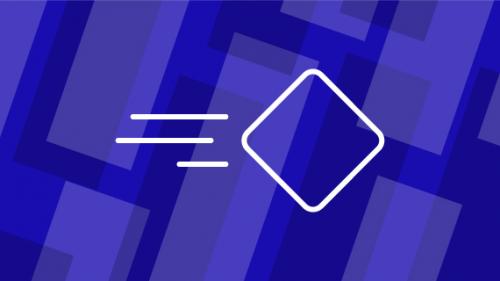

Dries Buytaert presenting the status of Drupal AI Initiative at DrupalCon Vienna 2025

Converting ambition into measurable progress

To turn the potential of AI into a reliable reality for the Drupal ecosystem, we have developed a repeatable, high-velocity production model that has already delivered significant results in its first four weeks.

A Dual-Workstream Approach to Innovation

To maximize efficiency and scale, development is organized into two closely collaborating workstreams. Together, they form a clear pipeline from exploration and prototyping to stable functionality:

- The Innovation Workstream: Led by QED42, this stream explores emerging technologies and approaches, such as evaluating Symfony AI, building AI-driven page creation, managing prompt context, and testing the latest LLM capabilities, to define what is possible within the ecosystem.

- The Product Workstream: Led by 1xINTERNET, this team takes proven innovations and refines, tests, and integrates them into a stable Drupal AI product, ensuring they are ready for enterprise use.

Sustainable Management through the RFP Model

This structure is powered by a Request for Proposal (RFP) model, sponsored by 28 organizations partnering with the Drupal AI Initiative.

The management of these workstreams is designed to rotate every six months via a new RFP process. Currently, 1xINTERNET provides the Product Owner for Product Development and QED42 provides the Product Owner for Innovation, while FreelyGive provides core technical architecture. This model ensures the initiative remains sustainable and neutral, while benefiting from the consistent professional expertise provided by the partners of the Drupal AI Initiative.

Professional Expertise from our AI Partners

The professional delivery of the initiative is driven by our AI Partners, who provide the specialized resources required for implementation. To maintain high development velocity, we operate in two-week sprint iterations. This predictable cadence allows our partners to effectively plan their staff allocations and ensures consistent momentum.

The Product Owners for each workstream work closely with the AI Initiative Leadership to deliver on the one-year roadmap. They maintain well-prepared backlogs, ensuring that participating organizations can contribute where their specific technical strengths are most impactful.

By managing the complete development lifecycle, including software engineering, UX design, quality assurance, and peer reviews, the sprint teams ensure the delivery of stable and well-architected solutions that are ready for production environments.

The Strategic Role of AI in Drupal CMS

The work of the AI Initiative provides important functionality to the recently launched Drupal CMS 2.0. This release represents one of the most significant evolutions in Drupal’s 25-year history, introducing Drupal Canvas and a suite of AI-powered tools within a visual-first platform designed for marketing teams and site builders alike.

The strategic cooperation between the Drupal AI Initiative and the Drupal CMS team ensures that our professional-grade AI framework delivers critical functionality while aligning with the goals of Drupal CMS.

Results from our first Sprints

The initial sprints demonstrate the high productivity of this dual-workstream approach, driven directly by the specialized staff of our partnering organizations. In the first two weeks, the sprint teams resolved 143 issues, creating significant momentum right from the first sprint.

Screenshot Drupal AI Dashboard